Hold Me Closer

User Research & Quality Assurance for mobile game development

Overview

As part of my education, I spent my 9th semester at DADIU, where I served as User Research and Quality Assurance Manager for our development team. Together we developed two minigames and our graduation game, which was released for Android and iOS.

I was trained in UR and QA methodologies by industry professionals from IO Interactive, Ubisoft and others, and I learned a great deal from this project.

Role

User Research & QA Manager

User Research, User Testing, Target Audience Research, Bug Tracking and Reporting

September 2019 - December 2019

Background

For my 9th semester, we had the option to take part in an internship programme at DADIU, the National Academy for Digital Interactive Entertainment. The programme is all about game development, and my role on our team was QA & UR Manager.

This role was a natural extension of my studies, which focused on research and usability, and I learned some valuable skills from this programme. I was part of the Lead Team, and I worked closely with the Project Manager, Game Director, Lead Programmer and Game Designers. I worked across the various departments, acting as a bridge between them, and gave voice to our target audience to make sure our game met user expectations.

What I achieved

I was responsible for testing our game and reporting any bugs I encountered, to make it as error-free as possible. I did this in close collaboration with the programmers on our team. My main duty as UR Manager was to connect the players to the development team, making sure that the game's design reflected their understanding of the game's topic and concept. Later on, I used their input to locate problem areas that the dev team needed to revisit to make the game a better experience.

To make it easier to find information on this page, I have split the content into separate tabs. Expand the section you'd like to read more about!

Mapping the user's thoughts

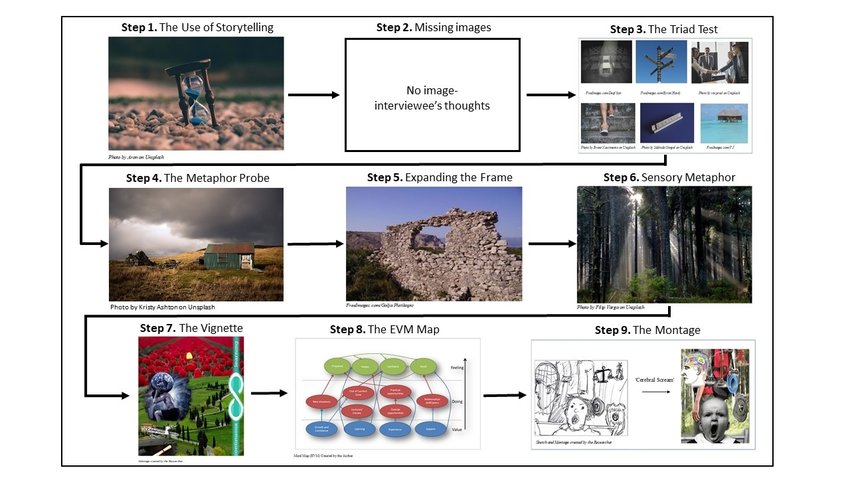

The Zaltman Metaphor Elicitation Technique, or ZMET, was one of the first tools I used during the production of our graduation game. The aim of this technique is to map the users' conscious and unconscious thoughts about an aspect of a product or topic of interest. Participants are asked to gather a set of pictures that they feel represent the specified topic or aspect, and through 10 steps we explore why they chose those pictures. Our game, Hold Me Closer, revolved around grief, so I gathered a few volunteer participants and carried out the ZMET with them. I combined the findings into a report that was presented to the game director. The aim in this context was to capture user expectations about the topic before production started, so that the visuals and other game elements could be tailored to the ZMET feedback and the game would better match those expectations.

Researching our Target Audience

This was arguably the hardest task, as we weren't given any tools to carry it out, and many of the databases that would have helped charge a hefty subscription fee. The result is only an approximation of our possible target users, based on research from various studies and sites. I carried it out during some downtime I had in production. Since this game was not commercially marketed, this report carried no weight, but I got to try out something I had never done before.

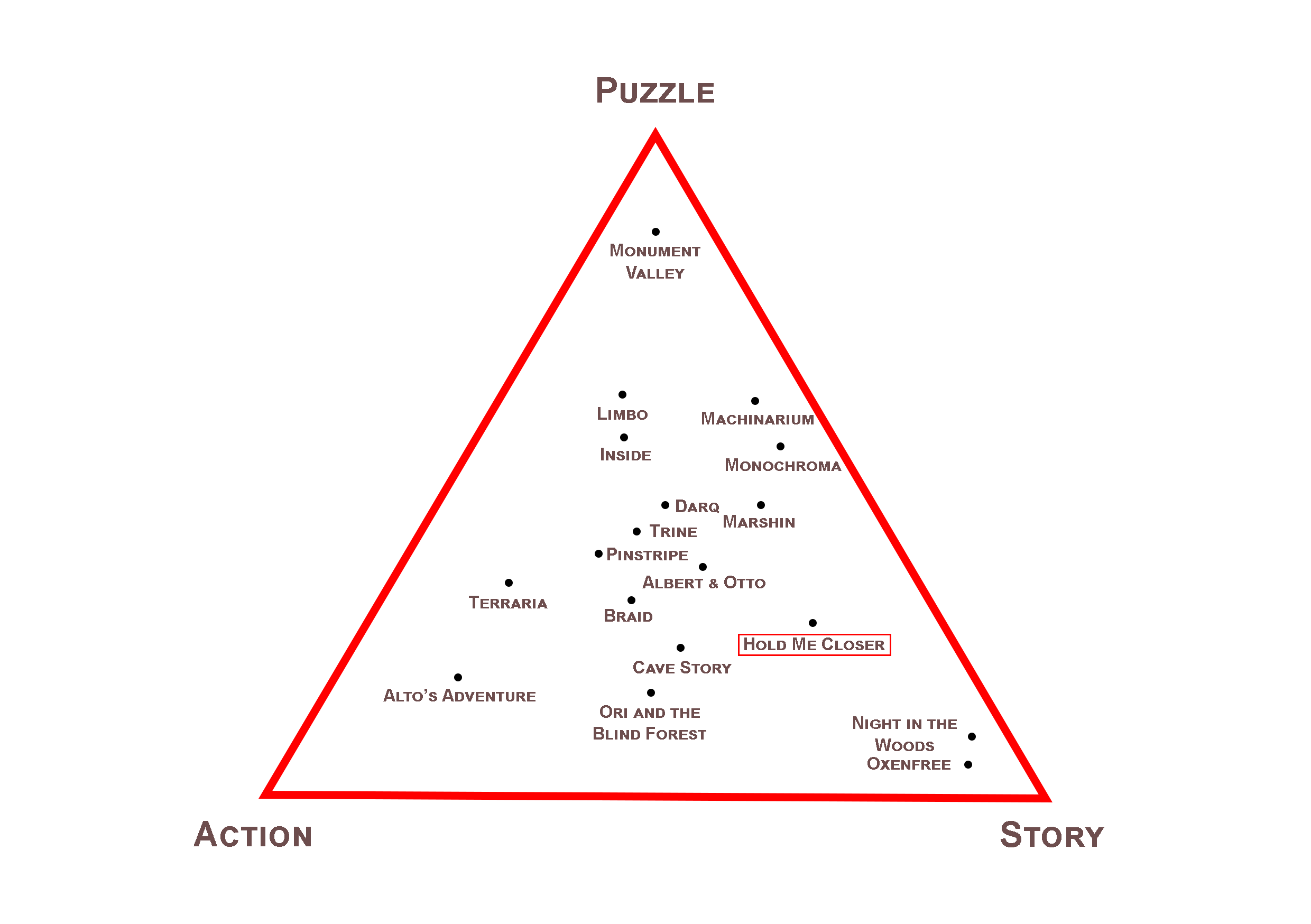

Mapping out our niche

This was a short task, but very enjoyable nonetheless. After the three key aspects of our game were identified, I looked for games similar to ours and plotted them on a graph, approximating where each game would sit based on how closely it matched each aspect. This involved a lot of guesswork, as the placement of these games was subjective, but it was based on consensus within the team, so the overall picture should be reasonably accurate.

While this was just a short experiment, it taught me more about competition analysis in general, even though those methods were not relevant to our project.

The proof of the pudding...

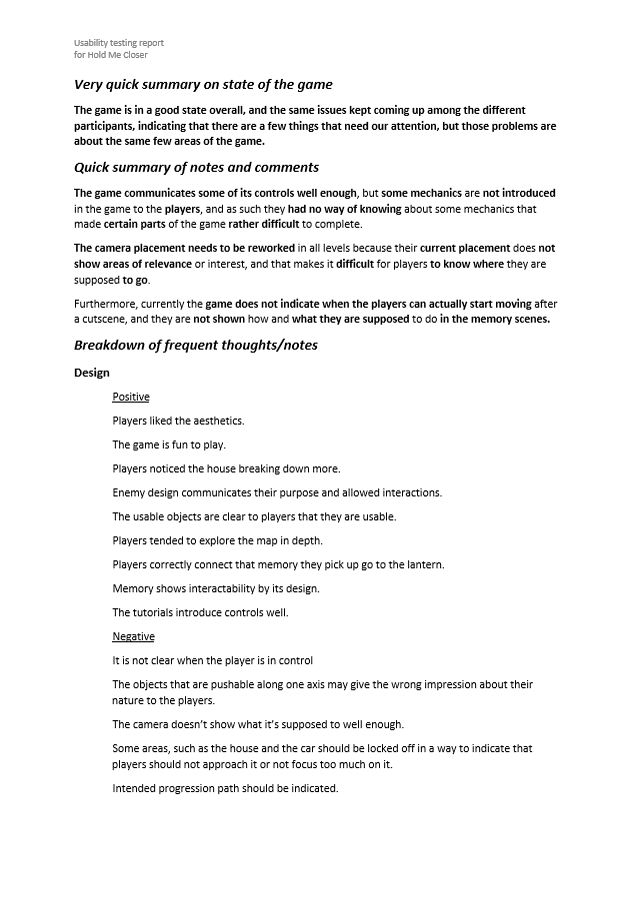

I carried out multiple rounds of user testing throughout the production period. Each round focused on a different aspect. I began with Usability testing, to find any problem areas in the game, such as issues with controls, usability and game mechanics. This was the earliest user test to be carried out, because at that point in production there was ample time to address the problems that users reported.

The next round of user testing was Narrative testing. The aim of the narrative test was to see how the playtesters perceived the story and the purpose of the various game elements, like enemies and objects in the environment. Because the game director wanted to create a game that was open to interpretation, it was somewhat difficult to gauge how it performed on this front. After tallying the results, it seemed that while not every tester was able to guess what the specific game elements represented, they could ballpark their meaning, which indicated successful design. These first two tests were based on player interviews and a think-aloud process, where the players' thoughts were recorded as they played the game.

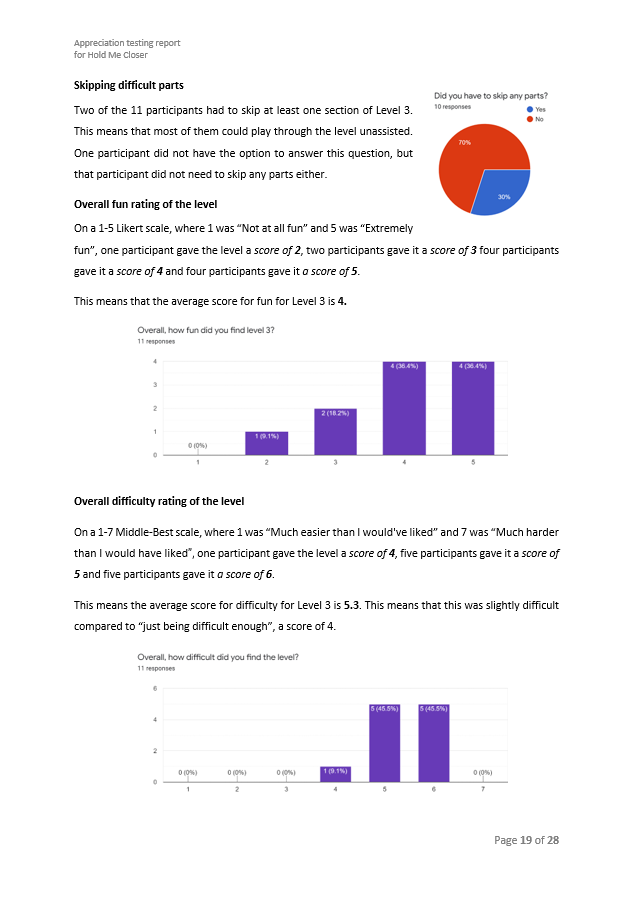

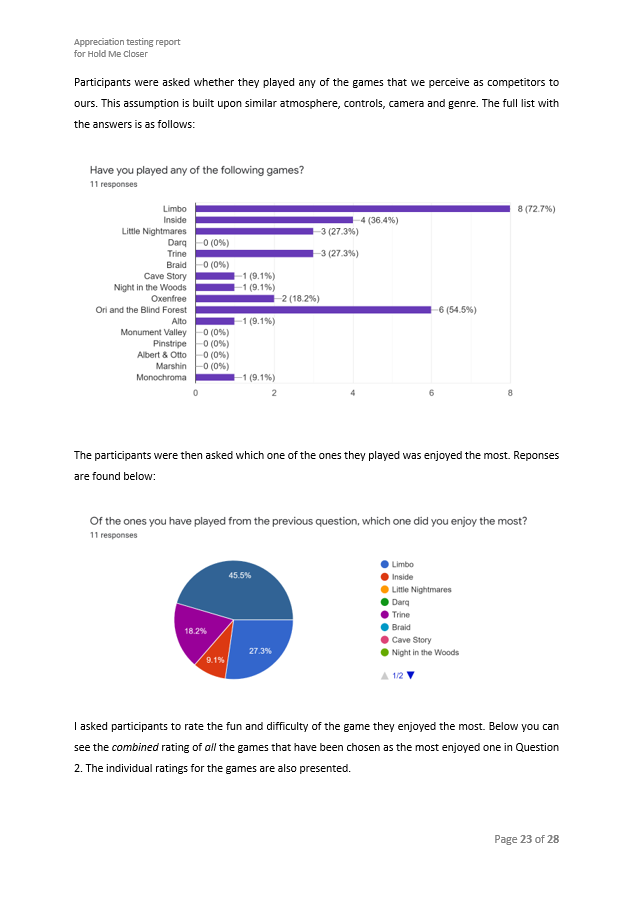

The final round was Appreciation testing, which was carried out to see how much the users liked the different parts of the game. It took place late in the development process, but with enough time left to make necessary changes to outstanding problem areas. This time, players were asked to complete a survey after completing each level, and the results were analyzed afterwards. The responses from all three tests were combined into a report that was presented to the lead team.

So many methods, not enough time

During the 3-day User Research workshop, we were introduced to other user research methods, including Rapid Iterative Testing and Evaluation (RITE), a quick way of adjusting and tuning details of a system between sessions, and Icon testing, which is used to assess whether players understand the meaning and functionality of icons. In our game production, Icon testing was not carried out separately, but rather formed a part of Usability and Narrative testing. We also covered Concept Art Evaluation and Focus Groups, but these were not used in the development of our graduation game.

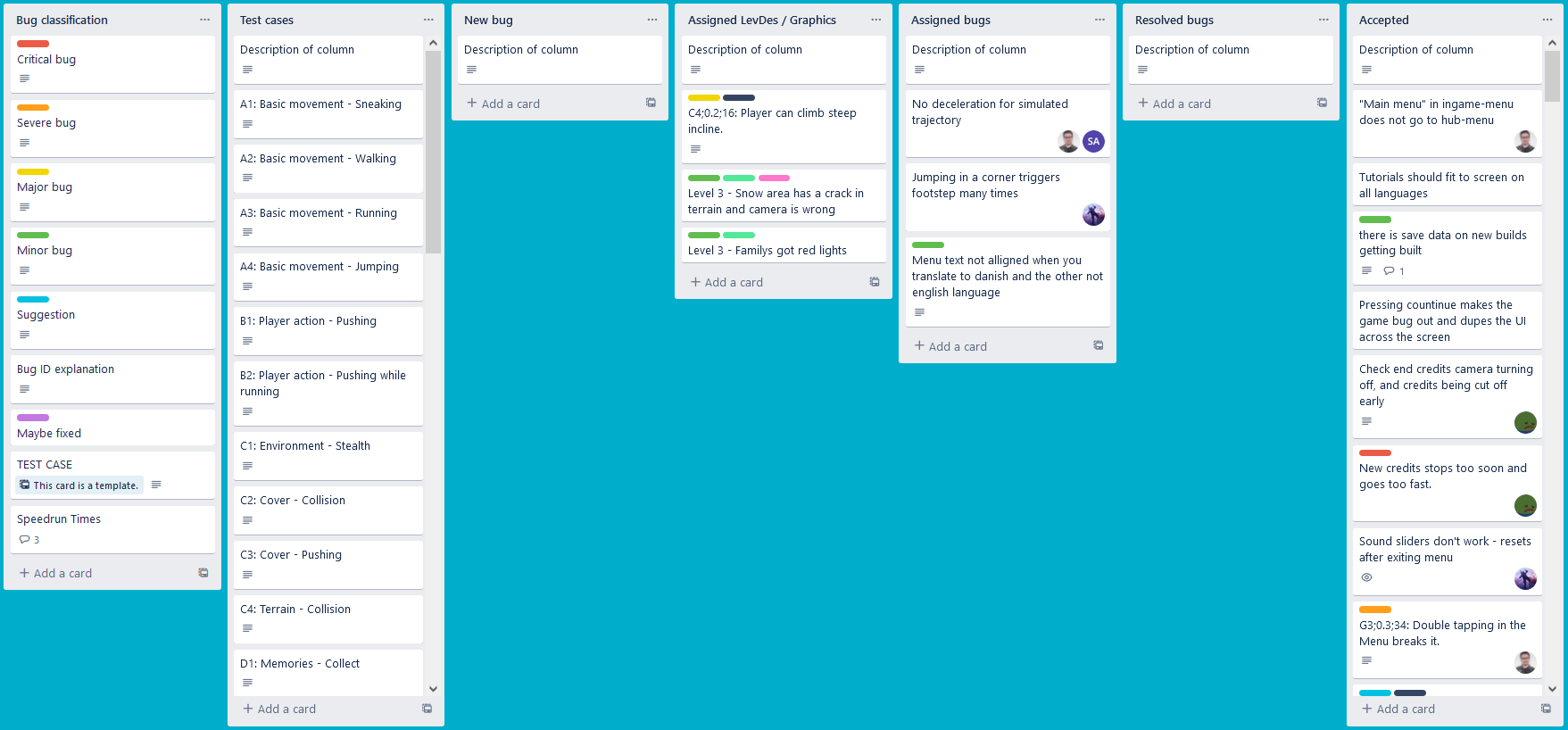

Tracking and managing bugs

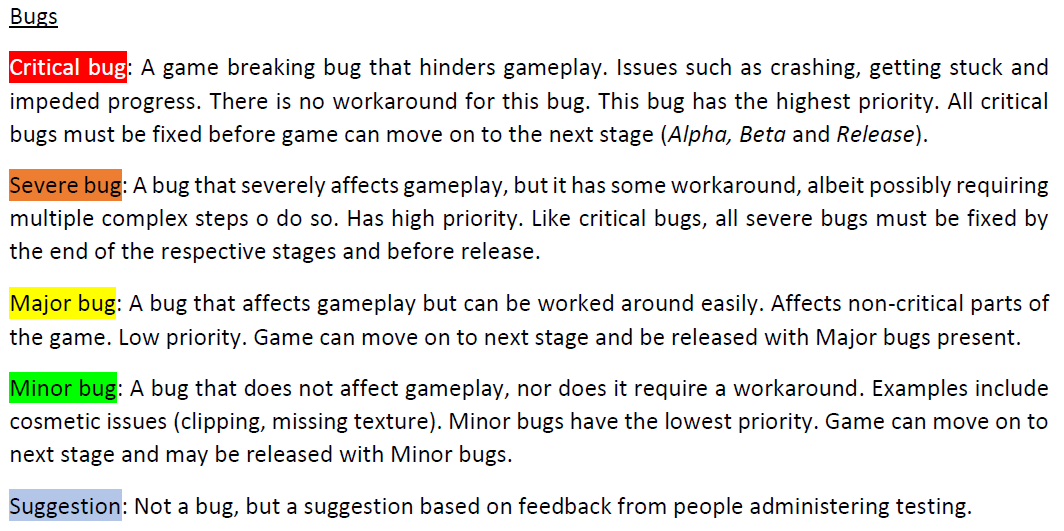

For bug tracking, I used Trello. The types and severities of the bugs were explained in both the Test Plan document and a separate card on the board, to make them accessible and understandable for everyone.

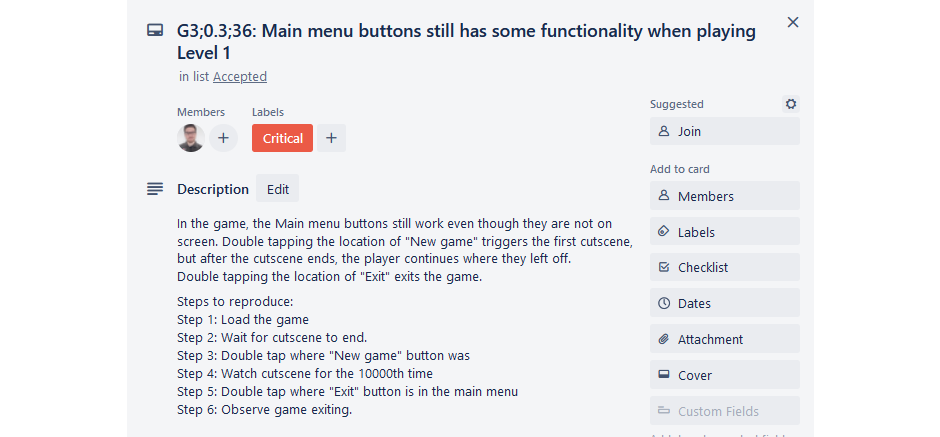

Bugs were given an ID that consisted of the Test Case Number, the Build Number and the Bug Number. This made it easy to understand what the bug pertained to.

Each possible test case was recorded on a card in a separate column on Trello, and this column was expanded as new test cases emerged. Test cases were assigned a letter, and subcategories were given numbers. For example, Movement was "A", and sneaking was its second subcategory, earning the "A2" designation in the test case library.
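To illustrate the ID scheme described above, here is a minimal Python sketch. It is not the tooling we used (everything lived on Trello cards); the `BugId` class, the `parse_bug_id` helper, the dash separator, the "B" prefix for the build and the zero-padded bug number are all assumptions made for this example. Only the three components (test case, build, bug) and the "A2" designation come from the project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BugId:
    """Hypothetical bug ID: test case designation, build number, bug number."""

    test_case: str  # e.g. "A2": category letter "A" (Movement), subcategory 2 (sneaking)
    build: int      # build in which the bug was found
    bug: int        # running bug number

    def __str__(self) -> str:
        # Assumed layout for illustration: <test case>-B<build>-<bug, 3 digits>
        return f"{self.test_case}-B{self.build}-{self.bug:03d}"


def parse_bug_id(text: str) -> BugId:
    """Split an ID in the assumed layout back into its three parts."""
    test_case, build, bug = text.split("-")
    if not build.startswith("B"):
        raise ValueError(f"unexpected build field: {build!r}")
    return BugId(test_case=test_case, build=int(build[1:]), bug=int(bug))


if __name__ == "__main__":
    bug_id = BugId(test_case="A2", build=14, bug=7)
    print(bug_id)                      # A2-B14-007
    print(parse_bug_id(str(bug_id)))   # round-trips to the same BugId
```

Keeping the test case designation at the front means that a glance at the ID tells you which area of the game the bug belongs to, which is the property the scheme was designed for.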

Charting our game

The test cases were documented meticulously, in a concise but precise manner, so that they were reproducible by our developers. The bugs were moved along the columns as development on them progressed, finally being marked "Accepted" once they were confirmed to have been removed from the game.

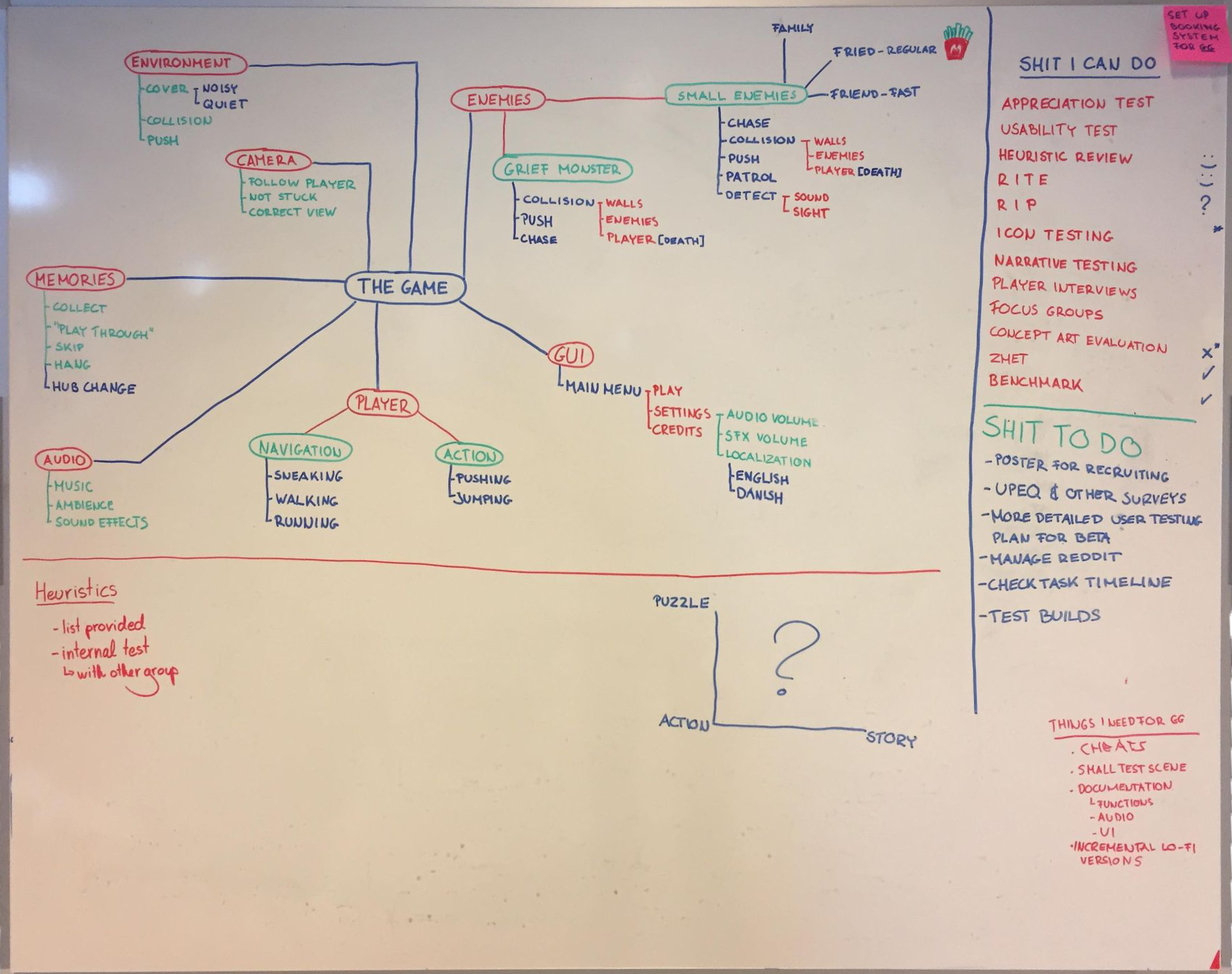

To help me build up the test case library, I drew up a chart of the game on a whiteboard and mapped out all possible areas of the game, which made it easier to create test cases for bug tracking. You can see this in the image below.

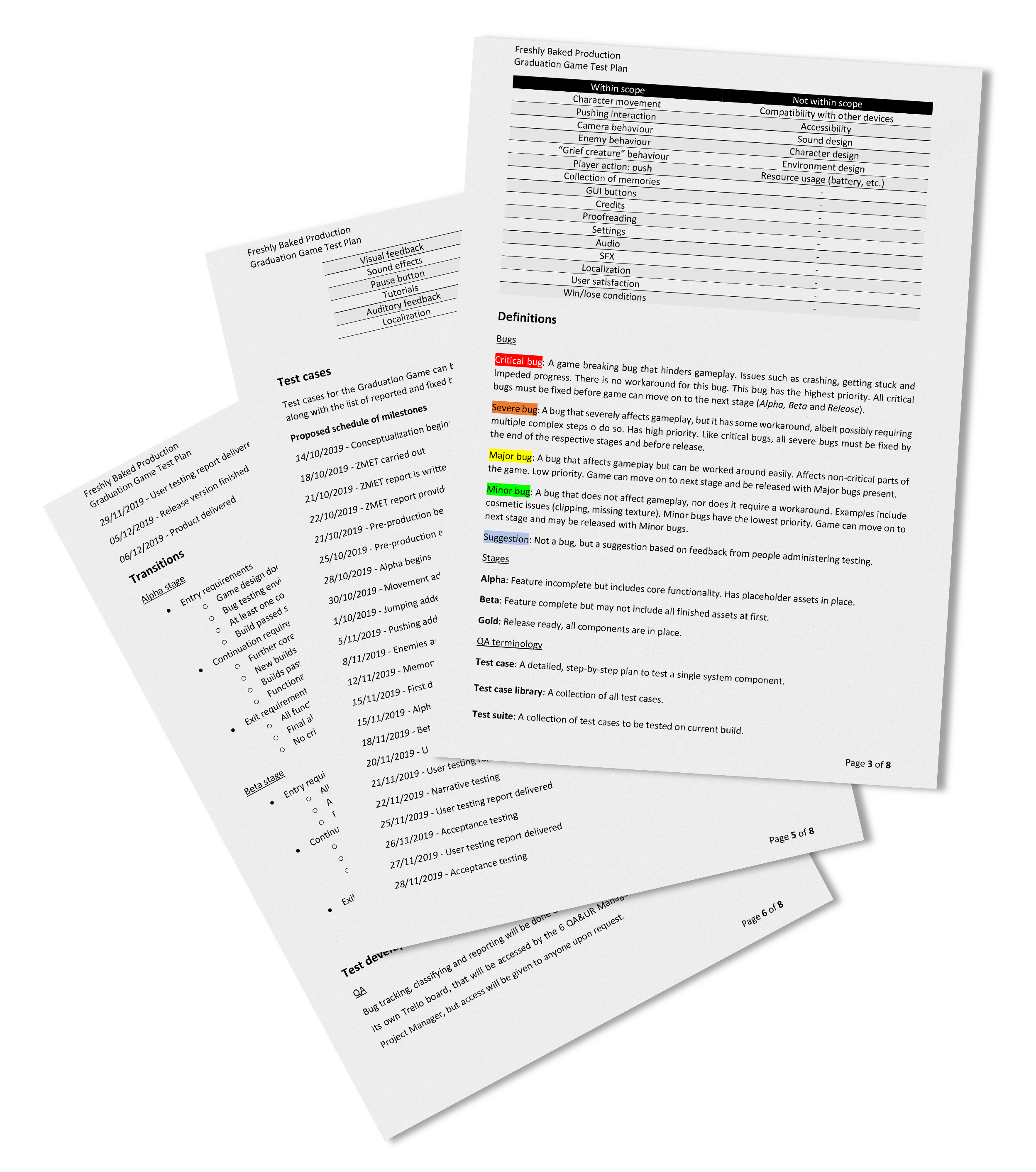

The Test Plan

We were introduced to a few ways we could carry out our QA duties. The first one was creating a detailed test plan. The test plan was an in-depth document detailing all the activities I would be carrying out as QA Manager. It served as a contract between me and the rest of the dev team. It included a list of what would and would not be tested, definitions of the terminology used in the document, quality risks that might affect the product, a proposed schedule of milestones, test configurations, test development and execution, and finally risks and contingencies.

We covered a number of techniques, such as session-based testing, test case-based testing and exploratory testing. Aside from this, I learned about various schools of testing: Analytical, Process-driven, Agile, Context-driven and Modern.

Retrospective and takeaways

Despite some hardships that came with this experience, the process was great for learning and for opening me up to new interests. I got to see how a startup works (and ends), and it gave me some insight into how I like to organise my work. Even though the company disbanded, I still met up with my two colleagues on the remaining days of my internship, and I spent the time learning about Adobe XD and best practices in my field.

A key takeaway for me was how important it is to keep everything organised and to maintain a living document on the development of our application: a prototype that can be edited and changed, and technical documentation that can bring everyone up to speed about the app. Just as important is having someone who can connect developers and users to other stakeholders. I came away from this period with more knowledge than I had when I began, and I couldn't ask for more.